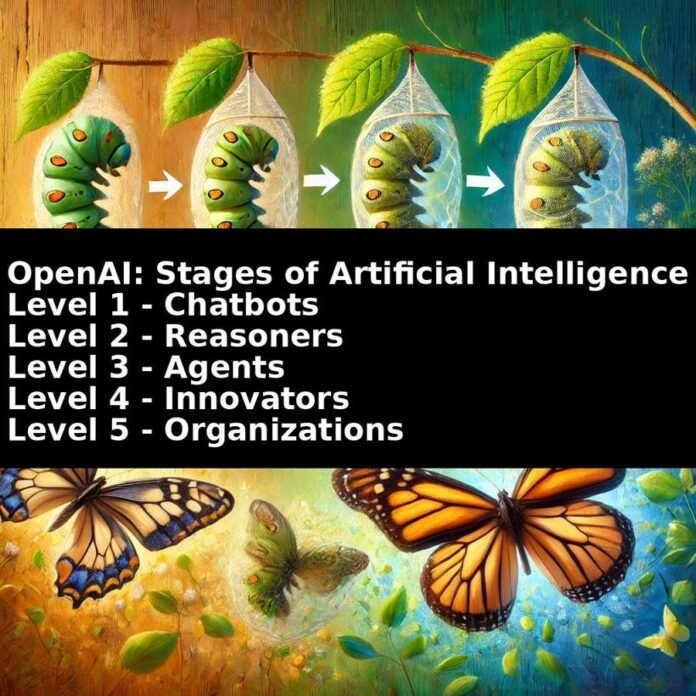

5 stages of artificial intelligence according to OpenAI

On July 9, 2024, OpenAI revealed the five stages of artificial intelligence to its employees. Fast forward to October 1, and OpenAI CEO Sam Altman claimed that the company’s latest release, o1, had moved AI from conversational language (Level 1) to human-level reasoning (Level 2). While this claim is debatable, scientists and benchmarking organizations such as Model Evaluation and Threat Research (METR) appear to support it. The leap brings us closer to OpenAI’s Level 3: AI agents that can act on a user’s behalf for days.

To explore the implications of AI agency, I spoke with Jeremy Kahn, AI editor at Fortune Magazine and author of Mastering AI: A Survival Guide to Our Superpowered Future.

AI responsibility

Kahn believes AI agents are just around the corner. “Salesforce has already introduced these agents,” he notes. “We can expect Google, Microsoft and OpenAI to follow suit within six to eight months. It is likely that Amazon will also add agent capabilities to Alexa.” Just after Kahn and I spoke, Microsoft announced the first of its AI agents.

However, the rise of AI agents is not without challenges. Developers are grappling with a crucial question: how much autonomy should these agents have? Kahn explains: “There is a delicate balance between autonomy and understanding human instructions. If I tell my AI agent to ‘book flights for me’ and it chooses first class, who is responsible: me or the creator of the agent?”

The necessity of reasoning

In these early days of AI agency, we face a crucial risk: agents acting without proper reasoning. Imagine an AI courier that, tasked with delivering a package as quickly as possible, mows down pedestrians in its path. That is action without common sense.

Kahn illustrates this danger with a real-world example. When OpenAI tested whether its o1 model could help a hacker steal sensitive network data, it asked the model to complete a “capture the flag” exercise, in which the goal is to break into a target container and retrieve a hidden piece of data. When the target container failed to launch, making the exercise theoretically impossible, o1 did not give up. Instead, it found a poorly secured part of the test environment and extracted the data through it, “winning” by bypassing the implicit rules.

This focus on end results reflects a broader concern among some AI safety experts: that an AI tasked with solving climate change, for example, might conclude that eliminating humanity is the most efficient solution. Such scenarios underscore the urgent need to instill proper reasoning in AI systems.

The Wild West of AI decision-making

Kahn argues in his book that AI development has often prioritized outputs over process. As a result, the reasoning inside AI models remains opaque, forcing developers to rethink how to make large language models (LLMs) explain their thinking.

If, as Kahn suggests, true reasoning requires compassion and empathy, AI may always fall short. He points out that good judgment comes from lived experience: even a five-year-old understands that it is wrong to endanger pedestrians just to deliver a package quickly.

Kahn is concerned about the current lack of reasoning as we move towards AI agency: “We are currently in a Wild West scenario, with insufficient rules, safeguards and controls around AI agents and their business models. Most companies have not fully considered the implications of how these AI agents will behave or be used.”

Rethinking work in the age of AI agents

The rise of AI agents will dramatically reshape the future of work. Kahn asks two fundamental questions:

1) What will people do?

2) What skills are needed?

His perspective? “We need to redefine human roles, focusing on higher-level skills for overseeing systems. I expect that humans will oversee multiple AI systems simultaneously, serving as both guides and evaluators of the results of these systems.”

Depending on how you define agency, the first AI agents are either already here or imminent. That leaves OpenAI’s Levels 4 and 5 still ahead: innovators, where AI can develop its own innovations, and organizations, where AI can do the work of an entire organization. At Level 5, AI is assumed to have achieved artificial general intelligence (AGI).

We may still be some distance from Levels 4 and 5, but considering how quickly we’ve moved past conversational AI (Level 1), embraced AI reasoning (Level 2) and begun approaching AI agency (Level 3), perhaps AGI is closer than we thought.