Apple Intelligence just keeps coming.

The first set of features from Apple’s much-hyped entry into the artificial intelligence boom will be released to the general public sometime next week, but the company is already working on the next one.

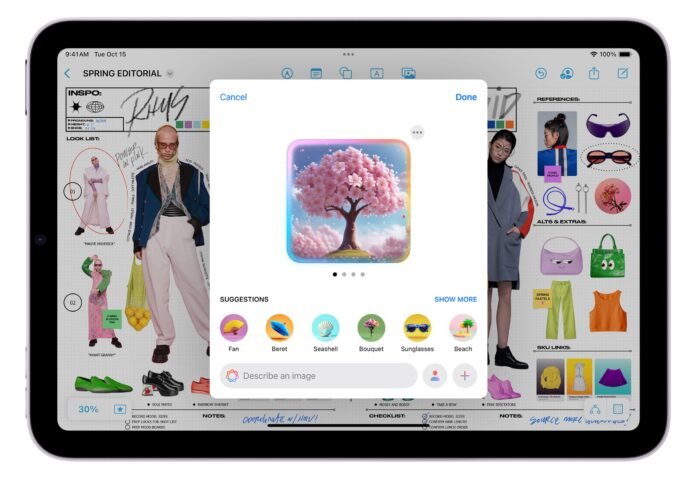

On Wednesday, Apple rolled out developer betas of iOS 18.2, iPadOS 18.2 and macOS 15.2. They include Apple Intelligence features previously seen only in Apple’s own marketing materials and product announcements: three different types of image generation, ChatGPT support, Visual Intelligence, expanded English support, and custom instructions for Writing Tools.

Three types of image generation

Apple’s suite of image-based generative AI tools, including Image Playground, Genmoji and Image Wand, will be in the hands of the public for the first time. When it introduced these features at WWDC in June, Apple said they were intended to create fun and playful images that can be shared with family and friends. This is one of the reasons why the company has eschewed photorealistic image generation and instead opted to use a few different styles it calls “animation” and “illustration.”

Genmoji creates several custom emoji options based on a user’s prompt. The results can be sent not only as stickers but also inline in a message, or even as a Tapback. (For example, you could ask for a “rainbow-colored apple” emoji.) You can also create an emoji from the faces in the People section of your Photos library. Creating Genmoji is not yet supported on Mac.

Image Playground is a simple image generator, but with some interesting guardrails. The feature offers concepts you can choose from to start the process, or you can simply type a description of the image you want. Like Genmoji, Image Playground can generate images based on people from your Photos library. It can also use individual photos as the starting point for related images. The images it creates conform to specific, non-photographic styles, such as Pixar-style animation or hand-drawn illustration.

Image Wand lets users turn a rough sketch into a more detailed image. To use it, select the new Image Wand tool from the Apple Pencil tool palette and circle a sketch that needs an AI upgrade. Image Wand can also generate images from scratch, based on the surrounding text.

Of course, image generation tools open a potential can of worms for creating inappropriate content. Apple is trying to combat that risk in a number of ways, including limiting the type of material the models are trained on and placing guardrails on what kinds of prompts are accepted. For example, it specifically filters out attempts to generate images containing nudity, violence, or copyrighted material. If an unexpected or concerning result is generated (a risk with any model of this type), Apple provides a way to report it directly within the tool itself.

Third-party developers will also have access to APIs for both Genmoji and Image Playground, allowing them to integrate support for those features into their own apps. That’s especially important for Genmoji, because otherwise third-party messaging apps won’t be able to support the custom emoji that users have created.

Give Writing Tools directions

The update also adds more free-form text input, the kind of open-ended prompting commonly associated with large language models. Writing Tools, for instance, mainly let you tap different buttons to change your text in its first version. It now has a custom text input field. When you select some text and open Writing Tools, you can tap that field and describe what you want Apple Intelligence to do to your text. I could have selected this paragraph, say, and then typed “make this funnier.”

Alongside the developer beta, Apple is also introducing a Writing Tools API. That’s important. Writing Tools is available in apps that use Apple’s standard text controls, but a number of apps (including some that I use all the time!) use their own custom text-editing controls. Those apps can adopt the Writing Tools API to get access to all Writing Tools features.

Here’s ChatGPT, if you want it

This new set of features also includes integration with ChatGPT for the first time. That includes the ability to pass Siri queries to ChatGPT, which happens dynamically based on the type of query. Asking Siri to plan a day of activities for you in another city is one example. Users are prompted to enable the ChatGPT integration when they first install the beta, and are asked again each time a query would be sent to ChatGPT. The integration can be disabled in Settings, or you can choose to turn off the prompt that appears for each query. In certain cases, you may receive additional prompts before specific types of personal data are shared with ChatGPT, for example if your query would also upload a photo.

Apple says that by default, requests sent to ChatGPT are not stored by the service or used for model training, and that your IP address is hidden so different queries cannot be linked together. While a ChatGPT account is not required to use the feature, you can choose to log into a ChatGPT account, which provides more consistent access to specific models and features. Otherwise, ChatGPT decides which model it uses to best respond to the question.

If you’ve ever used ChatGPT for free, you know that the service has some limitations on which models are used and how many queries you can make in a given period. Notably, Apple Intelligence users don’t get unlimited ChatGPT usage either: if you use it enough, you will probably run into usage limits. However, it’s unclear whether Apple’s deal with OpenAI makes those limits more generous for iOS users than for randos on the ChatGPT website. (If you do pay for ChatGPT, you are subject to your ChatGPT account’s limits.)

Visual intelligence on iPhone 16 models

For owners of iPhone 16 and iPhone 16 Pro models, this beta also includes the Visual Intelligence feature first shown off with the debut of those devices last month. (To activate it, press and hold the Camera Control button, then point the camera at something and press the button again.) Visual Intelligence then looks up information about what the camera is seeing, such as the opening hours of a restaurant you’re standing in front of or event details from a poster. It can also translate text, scan QR codes, read text aloud, and more. It can optionally use ChatGPT and Google Search to find more information about what it is looking at.

Support for more English dialects

Apple Intelligence debuted with support only for US English, but in the new developer betas that support has become a tiny bit more global. It’s still English only for now, but English speakers in Canada, the United Kingdom, Australia, New Zealand, and South Africa can now use Apple Intelligence in their local variant of English. (English support is coming for India and Singapore, and Apple says support for several other languages will also arrive in 2025, including Chinese, French, German, Italian, Japanese, Korean, Portuguese, Spanish and Vietnamese.)

What’s next?

As part of these developer betas, Apple collects feedback on the performance of its Apple Intelligence features. The company plans to use that feedback not only to improve its tools, but also to gauge when they are ready to be rolled out to a wider audience. We definitely get the feeling that Apple is being as careful as possible here while at the same time heading headlong into its artificial intelligence future. It knows there will be quirks when it comes to AI-based tools, and that makes these beta cycles even more important in terms of shaping the direction of the final product.

Clearly there will be many more developer betas, and eventually public betas, before these .2 releases go to the general public later this year. And a number of announced Apple Intelligence features are still to come, especially some essential new Siri features, including support for personal context and in-app actions using App Intents. Today marks the next step in Apple Intelligence, but Apple still has a long way to go.–Jason Snell and Dan Moren

If you appreciate articles like this, please support us by becoming a Six Colors subscriber. Subscribers get access to an exclusive podcast, members-only stories and a dedicated community.