Apple has created a virtual research environment that lets the public test the security of its Private Cloud Compute system, and has released the source code for some “key components” to help researchers analyze the architecture’s privacy and security features.

To further improve the system’s security, the company has also expanded its security bounty program with rewards of up to $1 million for vulnerabilities that could compromise “PCC’s fundamental security and privacy guarantees.”

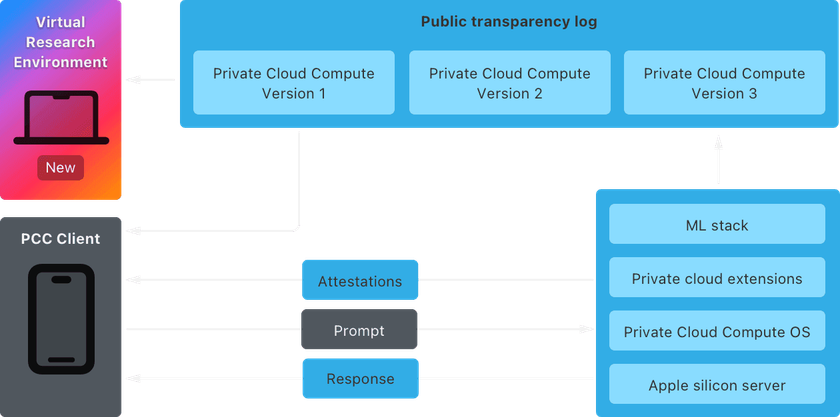

Private Cloud Compute (PCC) is a cloud intelligence system for complex AI processing of data from user devices in a way that does not compromise privacy.

This is achieved through end-to-end encryption, which ensures that personal data sent from Apple devices to PCC is accessible only to the user; not even Apple can observe it.

Shortly after Apple announced PCC, the company gave early access to select security researchers and auditors so they could verify the privacy and security promises for the system.

Virtual research environment

In a blog post today, Apple announced that access to PCC is now public and anyone curious can inspect how it works and check whether it lives up to the promised claims.

The company has made available the Private Cloud Compute Security Guide, which explains the architecture, the technical details of its components, and how they work.

Apple also offers a Virtual Research Environment (VRE), which replicates the cloud intelligence system locally and allows you to inspect it, test its security, and look for problems.

“The VRE runs the PCC node software on a virtual machine with only minor modifications. Userspace software works similarly to the PCC node, with the boot process and kernel adapted for virtualization,” Apple explains, sharing documentation on how to set up the virtual research environment on your device.


The VRE is available in the macOS Sequoia 15.1 Developer Preview and requires a device with Apple Silicon and at least 16 GB of unified memory.

The tools available in the virtual environment make it possible to boot a PCC release in an isolated environment, modify and debug the PCC software for a more thorough investigation, and perform inference against demonstration models.

To make things easier for researchers, Apple has decided to release the source code for some PCC components that implement security and privacy requirements:

- The CloudAttestation project – responsible for constructing and validating the PCC node’s attestations.

- The Thimble project – includes the privatecloudcomputed daemon, which runs on a user’s device and uses CloudAttestation to enforce verifiable transparency.

- The splunkloggingd daemon – filters the logs that can be sent from a PCC node to protect against accidental data disclosure.

- The srd_tools project – contains the VRE tooling and can be used to understand how the VRE enables execution of the PCC code.

Apple is also encouraging research by adding new PCC categories to its security bounty program, covering accidental data disclosure, external compromise through user requests, and physical or internal access.

The highest reward is $1 million for a remote attack on request data that achieves arbitrary code execution with arbitrary entitlements.

If a researcher shows how to access a user’s request data or sensitive information, they could receive a $250,000 bounty.

Demonstrating the same type of attack from a privileged network position can earn a reward of between $50,000 and $150,000.

However, Apple says it will consider any issues that have a significant impact on PCC, even if they fall outside the bug bounty program’s categories.

The company believes its “Private Cloud Compute is the most advanced security architecture ever deployed for cloud AI computing at scale,” but still hopes to further strengthen its security and privacy with the help of researchers.