AMD is trying to make a dent in the AI graphics card market, but will it be able to eat into Nvidia’s market share?

The artificial intelligence (AI) accelerator market is currently dominated by Nvidia (NVDA -4.69%), which has built a technological lead over rivals and is estimated to control more than 85% of this space.

However, fellow chipmakers such as Advanced Micro Devices (AMD -5.22%) and Intel are also trying to get their hands on a share of this lucrative market. AMD recently unveiled a new AI chip, the Instinct MI325X, and claims it’s better than Nvidia’s current flagship H200 processor. However, AMD’s shares fell after the announcement.

Read on to find out why that might be the case and see if AMD’s latest AI chip should be a cause for concern for Nvidia investors.

AMD’s MI325X seems impressive, but perhaps a little too late

AMD points out that its MI325X data center accelerator is equipped with 256 gigabytes (GB) of high-bandwidth HBM3E memory that supports a bandwidth of 6 terabytes (TB) per second. According to AMD, the latest chip has 1.8x more capacity and 1.3x more bandwidth than Nvidia’s flagship H200 processor, which is based on the Hopper architecture.

As a result, AMD claims that the MI325X theoretically offers 1.3x better compute performance, along with an identical advantage in AI inference on the Llama 3.1 and Mistral 7B large language models (LLMs). The company is on track to start production of the MI325X in the current quarter and says it will be widely available in the first quarter of 2025.

Considering that Nvidia’s H200 processor offers 141GB of HBM3E memory with a bandwidth of 4.8TB/second, there’s no doubt that AMD’s new AI chip is superior on paper. But at the same time, investors should note that the H200 was announced almost a year ago and hit the market in the second quarter of 2024. AMD’s chip will therefore come onto the market about nine months later than the H200.
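The on-paper comparison can be checked with simple division. The sketch below uses only the memory figures quoted above; the dictionary and function names are illustrative, and the inputs are vendor-published specifications rather than measured results.

```python
# Memory specs as quoted in the article (vendor figures, not benchmarks).
MI325X = {"memory_gb": 256, "bandwidth_tb_s": 6.0}
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}


def spec_ratio(chip_a: dict, chip_b: dict, key: str) -> float:
    """Return how many times larger the spec `key` is on chip_a than on chip_b."""
    return chip_a[key] / chip_b[key]


capacity_ratio = spec_ratio(MI325X, H200, "memory_gb")
bandwidth_ratio = spec_ratio(MI325X, H200, "bandwidth_tb_s")

print(f"Memory capacity advantage:  {capacity_ratio:.2f}x")
print(f"Memory bandwidth advantage: {bandwidth_ratio:.2f}x")
```

The capacity ratio works out to roughly 1.82x, in line with AMD’s 1.8x claim. The bandwidth ratio from these figures is 1.25x, which AMD presents as 1.3x.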

Meanwhile, Nvidia will be upping its game in the AI chip market with its next-generation Blackwell processors. The upcoming B200 Blackwell graphics processing unit (GPU) is expected to feature 208 billion transistors, compared to the 153 billion transistors on the MI325X. More importantly, the B200 is reportedly manufactured using Taiwan Semiconductor Manufacturing’s 4-nanometer (nm) 4NP process node, compared to the 5-nm process node for the MI325X.

Nvidia will pack a much larger number of transistors onto a chip built on a smaller process node, meaning the B200 processor will likely have more computing power while being more energy efficient. Additionally, the B200 will reportedly offer a higher memory bandwidth of 8TB/second. The good part is that Nvidia expects to “achieve several billion dollars in Blackwell revenue” in the fourth quarter of the current fiscal year, which runs from November 2024 to January 2025.

What this means is that Nvidia will likely have a generational lead over AMD by the time the latter’s latest chip is widely available. As a result, there doesn’t appear to be a strong reason for Nvidia investors to be wary of AMD’s latest offering. However, there appears to be a silver lining for AMD investors.

The new chip may not beat Nvidia, but it could give AMD a nice boost

Nvidia is running away with the AI chip market and appears on track to generate nearly $100 billion in data center revenue in the current fiscal year. AMD, on the other hand, expects its data center AI GPU revenue to reach $4.5 billion in 2024, which pales in comparison to Nvidia’s potential revenue from this segment.

However, AMD doesn’t need to beat Nvidia in AI GPUs to boost its growth. It simply needs to become the second-largest player in this market, which AMD thinks could be worth as much as $500 billion by 2028. The company started selling its AI GPUs in late 2023 and sold $6.5 billion worth of data center chips last year, including the Epyc server processors.

The company has already generated $5.2 billion in data center revenue in the first six months of 2024, which is almost double the data center revenue in the same period last year. At this run rate, AMD could generate nearly $10.5 billion in data center revenue this year, of which $4.5 billion is expected to come from sales of AI GPUs. Assuming AMD can capture even 10% of the AI GPU market by 2028, it could generate $50 billion in annual revenue from this segment, which would be a big jump from this year’s projections.
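The arithmetic behind these projections is straightforward, and a minimal sketch makes the assumptions explicit. All inputs are the figures quoted above; the variable names are illustrative, and the 10% share is a hypothetical scenario rather than a forecast.

```python
# All amounts in billions of US dollars, as quoted in the article.
h1_2024_data_center_revenue = 5.2   # first six months of 2024
ai_gpu_revenue_2024 = 4.5           # AMD's own 2024 expectation
ai_gpu_market_2028 = 500.0          # AMD's estimate of the 2028 market
assumed_market_share = 0.10         # hypothetical scenario, not a forecast

# Simple run rate: assume the second half matches the first half.
annualized_data_center_revenue = h1_2024_data_center_revenue * 2

# Revenue implied by the assumed share of the 2028 market.
implied_ai_gpu_revenue_2028 = ai_gpu_market_2028 * assumed_market_share

# How many times larger that would be than this year's AI GPU revenue.
growth_multiple = implied_ai_gpu_revenue_2028 / ai_gpu_revenue_2024

print(f"Annualized data center revenue: ${annualized_data_center_revenue:.1f}B")
print(f"Implied 2028 AI GPU revenue:    ${implied_ai_gpu_revenue_2028:.1f}B")
print(f"Multiple of 2024 AI GPU sales:  {growth_multiple:.1f}x")
```

Doubling the first-half figure gives $10.4 billion, and a 10% share of a $500 billion market would be about 11 times this year’s expected AI GPU revenue. The run rate assumes a flat second half, so it is a floor only if data center revenue keeps growing.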

Furthermore, AMD’s MI325X processor could witness strong demand as Nvidia noted during its previous earnings conference call that the H200 processors are expected to continue to sell well despite the arrival of the new Blackwell chips. More specifically, Nvidia estimates that shipments of Hopper-based chips such as the H200 will increase in the second half of the current fiscal year, suggesting there is room for the MI325X despite the arrival of more powerful processors.

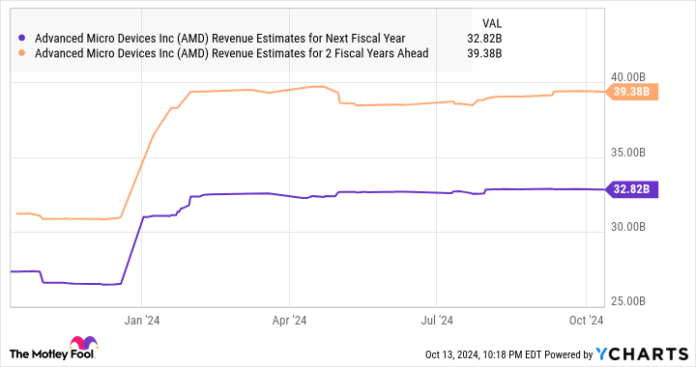

Moreover, AMD’s growing presence in the AI GPU market is expected to drive growth starting next year. While the company’s revenue is expected to rise 13% to $25.6 billion in 2024, forecasts for the coming years appear to be even better.

AMD revenue estimates for the next fiscal year. Data by YCharts.

Even if AMD can’t beat Nvidia in the AI chip market, it could prove to be a solid AI stock in the long run if it manages to carve out a small niche for itself. At the same time, investors should keep in mind that AMD can also benefit from other AI-related applications, such as AI-enabled PCs and server processors.

Even though AMD stock has posted a tepid gain of just 14% so far in 2024, investors would do well to keep the stock on their watchlist as it could rise impressively, thanks to the massive opportunity in AI GPUs and other markets.

Harsh Chauhan has no position in any of the stocks mentioned. The Motley Fool holds positions in and recommends Advanced Micro Devices, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool recommends Intel and recommends the following options: Short November 2024 $24 Calls on Intel. The Motley Fool has a disclosure policy.